|

| | GLayerRestrictedBoltzmannMachine (size_t inputs, size_t outputs, GActivationFunction *pActivationFunction=NULL) |

| | General-purpose constructor. Takes ownership of pActivationFunction. More...

|

| |

| | GLayerRestrictedBoltzmannMachine (GDomNode *pNode) |

| | Deserializing constructor. More...

|

| |

| | ~GLayerRestrictedBoltzmannMachine () |

| |

| virtual void | activate () |

| | Applies the activation function to the net vector to compute the activation vector. More...

|

| |

| virtual double * | activation () |

| | Returns the activation values on the hidden end. More...

|

| |

| double * | activationReverse () |

| | Returns the activation for the visible end of this layer. More...

|

| |

| virtual void | backPropError (GNeuralNetLayer *pUpStreamLayer, size_t inputStart=0) |

| | Backpropagates the error from this layer into the upstream layer's error vector. (Assumes that the error in this layer has already been deactivated. The error this computes is with respect to the output of the upstream layer.) More...

|

| |

| double * | bias () |

| | Returns the bias for the hidden end of this layer. More...

|

| |

| const double * | bias () const |

| | Returns the bias for the hidden end of this layer. More...

|

| |

| double * | biasDelta () |

| | Returns the delta vector for the bias. More...

|

| |

| double * | biasReverse () |

| | Returns the bias for the visible end of this layer. More...

|

| |

| double * | biasReverseDelta () |

| | Returns the delta vector for the reverse bias. More...

|

| |

| virtual void | computeError (const double *pTarget) |

| | Computes the error terms associated with the output of this layer, given a target vector. (Note that this is the error of the output, not the error of the weights. To obtain the error term for the weights, deactivateError must be called.) More...

|

| |

| void | contrastiveDivergence (GRand &rand, const double *pVisibleSample, double learningRate, size_t gibbsSamples=1) |

| | Refines this layer by contrastive divergence. pVisibleSample should point to a vector of inputs that will be presented to this layer. More...

|

| |
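The contrastive divergence update described above can be sketched with a single Gibbs step, which matches the default `gibbsSamples=1`. This is a minimal illustration, not the GClasses implementation: `TinyRbm`, `hiddenProbs`, `visibleProbs`, and `cd1Step` are hypothetical names, logistic units are assumed, and the `weights[hidden][visible]` layout is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for an RBM layer; not the GClasses data layout.
struct TinyRbm {
    std::vector<std::vector<double>> weights; // weights[j][i]: visible i <-> hidden j
    std::vector<double> hiddenBias;
    std::vector<double> visibleBias;
};

static double logistic(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// P(h_j = 1 | v) for every hidden unit j.
std::vector<double> hiddenProbs(const TinyRbm& rbm, const std::vector<double>& v) {
    std::vector<double> h(rbm.hiddenBias.size());
    for (std::size_t j = 0; j < h.size(); j++) {
        double net = rbm.hiddenBias[j];
        for (std::size_t i = 0; i < v.size(); i++)
            net += rbm.weights[j][i] * v[i];
        h[j] = logistic(net);
    }
    return h;
}

// P(v_i = 1 | h) for every visible unit i.
std::vector<double> visibleProbs(const TinyRbm& rbm, const std::vector<double>& h) {
    std::vector<double> v(rbm.visibleBias.size());
    for (std::size_t i = 0; i < v.size(); i++) {
        double net = rbm.visibleBias[i];
        for (std::size_t j = 0; j < h.size(); j++)
            net += rbm.weights[j][i] * h[j];
        v[i] = logistic(net);
    }
    return v;
}

// One CD-1 update: positive phase on v0, one Gibbs step, negative phase on v1.
void cd1Step(TinyRbm& rbm, std::mt19937& rng,
             const std::vector<double>& v0, double learningRate) {
    assert(v0.size() == rbm.visibleBias.size());
    std::vector<double> h0 = hiddenProbs(rbm, v0);

    // Binary sample of the hidden units drives the reconstruction.
    std::vector<double> h0Sample(h0.size());
    for (std::size_t j = 0; j < h0.size(); j++)
        h0Sample[j] = std::bernoulli_distribution(h0[j])(rng) ? 1.0 : 0.0;

    std::vector<double> v1 = visibleProbs(rbm, h0Sample);
    std::vector<double> h1 = hiddenProbs(rbm, v1);

    // Move toward the data statistics and away from the reconstruction statistics.
    for (std::size_t j = 0; j < h0.size(); j++) {
        for (std::size_t i = 0; i < v0.size(); i++)
            rbm.weights[j][i] += learningRate * (h0[j] * v0[i] - h1[j] * v1[i]);
        rbm.hiddenBias[j] += learningRate * (h0[j] - h1[j]);
    }
    for (std::size_t i = 0; i < v0.size(); i++)
        rbm.visibleBias[i] += learningRate * (v0[i] - v1[i]);
}
```

Calling `cd1Step` once per training vector corresponds loosely to one call of `contrastiveDivergence` with a single Gibbs sample. Whether the library uses probabilities or binary samples in each phase is not stated here.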

| virtual void | copyBiasToNet () |

| | Copies the bias vector into the net vector. More...

|

| |

| virtual void | copyWeights (GNeuralNetLayer *pSource) |

| | Copy the weights from pSource to this layer. (Assumes pSource is the same type of layer.) More...

|

| |

| virtual size_t | countWeights () |

| | Returns the number of double-precision elements necessary to serialize the weights of this layer into a vector. More...

|

| |

| virtual void | deactivateError () |

| | Multiplies each element in the error vector by the derivative of the activation function. This results in the error having meaning with respect to the weights, instead of the output. More...

|

| |

| virtual void | diminishWeights (double amount, bool regularizeBiases) |

| | Diminishes all the weights (that is, moves them in the direction toward 0) by the specified amount. More...

|

| |

| void | drawSample (GRand &rand, size_t iters) |

| | Draws a sample observation from "iters" iterations of Gibbs sampling. The resulting sample is placed in activationReverse(), and the corresponding encoding will be in activation(). More...

|

| |

| virtual void | dropConnect (GRand &rand, double probOfDrop) |

| | Randomly sets some of the weights to 0. (The dropped weights are restored when you call updateWeightsAndRestoreDroppedOnes.) More...

|

| |

| virtual void | dropOut (GRand &rand, double probOfDrop) |

| | Randomly sets the activation of some units to 0. More...

|

| |

| virtual double * | error () |

| | Returns a buffer used to store error terms for each unit in this layer. More...

|

| |

| double * | errorReverse () |

| | Returns the error term for the visible end of this layer. More...

|

| |

| void | feedBackward (const double *pIn) |

| | Feeds a vector from the hidden end to the visible end. The results are placed in activationReverse(). More...

|

| |

| virtual void | feedIn (const double *pIn, size_t inputStart, size_t inputCount) |

| | Feeds a portion of the inputs through the weights and updates the net. More...

|

| |

| double | freeEnergy (const double *pVisibleSample) |

| | Returns the free energy of this layer. More...

|

| |
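For reference, the textbook free energy of an RBM with binary hidden units is F(v) = -a·v - Σ_j log(1 + exp(c_j + W_j·v)), where a is the visible bias and c the hidden bias. The sketch below computes that quantity. It is an assumption that `freeEnergy` returns this exact formula; `rbmFreeEnergy` is a hypothetical helper and not part of GClasses.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: textbook free energy of a binary-hidden RBM.
// weights[j][i] connects visible unit i to hidden unit j (assumed layout).
double rbmFreeEnergy(const std::vector<std::vector<double>>& weights,
                     const std::vector<double>& hiddenBias,
                     const std::vector<double>& visibleBias,
                     const std::vector<double>& visible) {
    assert(weights.size() == hiddenBias.size());
    assert(visible.size() == visibleBias.size());

    // Visible bias term: -a . v
    double energy = 0.0;
    for (std::size_t i = 0; i < visible.size(); i++)
        energy -= visibleBias[i] * visible[i];

    // Hidden term: -sum_j softplus(c_j + W_j . v)
    for (std::size_t j = 0; j < weights.size(); j++) {
        assert(weights[j].size() == visible.size());
        double net = hiddenBias[j];
        for (std::size_t i = 0; i < visible.size(); i++)
            net += weights[j][i] * visible[i];
        // Numerically stable log(1 + exp(net)).
        double softplus = net > 0.0 ? net + std::log1p(std::exp(-net))
                                    : std::log1p(std::exp(net));
        energy -= softplus;
    }
    return energy;
}
```

Lower free energy corresponds to visible vectors the model considers more probable, so comparing the value on training and held-out samples is a common way to watch for overfitting.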

| virtual size_t | inputs () |

| | Returns the number of visible units. More...

|

| |

| virtual void | maxNorm (double max) |

| | Scales weights if necessary such that the magnitude of the weights (not including the bias) feeding into each unit is <= max. More...

|

| |
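A minimal sketch of the max-norm constraint described above, assuming "magnitude" means the Euclidean length of each unit's incoming weight vector and that each row of the matrix holds one unit's incoming weights. `maxNormRows` is a hypothetical helper, not the library's code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: rescale each row (one unit's incoming weights, bias
// excluded) so that its Euclidean length does not exceed maxMagnitude.
void maxNormRows(std::vector<std::vector<double>>& weights, double maxMagnitude) {
    assert(maxMagnitude > 0.0);
    for (std::vector<double>& row : weights) {
        double sumSquares = 0.0;
        for (double w : row)
            sumSquares += w * w;
        double magnitude = std::sqrt(sumSquares);
        if (magnitude > maxMagnitude) {
            // Shrink the whole row uniformly; its direction is preserved.
            double scale = maxMagnitude / magnitude;
            for (double& w : row)
                w *= scale;
        }
    }
}
```

Rows already within the limit are left untouched, which is what "if necessary" implies.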

| double * | net () |

| | Returns the net vector (that is, the values computed before the activation function was applied) from the most recent call to feedForward(). More...

|

| |

| double * | netReverse () |

| | Returns the net for the visible end of this layer. More...

|

| |

| virtual size_t | outputs () |

| | Returns the number of hidden units. More...

|

| |

| virtual void | perturbWeights (GRand &rand, double deviation, size_t start=0, size_t count=INVALID_INDEX) |

| | Perturbs the weights that feed into the specified units with Gaussian noise. Also perturbs the bias. start specifies the first unit whose incoming weights are perturbed. count specifies the maximum number of units whose incoming weights are perturbed. The default values for these parameters apply the perturbation to all units. More...

|

| |

| virtual void | renormalizeInput (size_t input, double oldMin, double oldMax, double newMin=0.0, double newMax=1.0) |

| | Adjusts weights such that values in the new range will result in the same behavior that previously resulted from values in the old range. More...

|

| |

| void | resampleHidden (GRand &rand) |

| | Performs binomial resampling of the activation values on the output end of this layer. More...

|

| |

| void | resampleVisible (GRand &rand) |

| | Performs binomial resampling of the activation values on the input end of this layer. More...

|

| |
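Binomial resampling, as used by resampleHidden and resampleVisible, can be read as replacing each activation p with 1 with probability p and with 0 otherwise. The sketch below shows that interpretation; `resampleBinary` is a hypothetical helper, activations are assumed to lie in [0, 1], and `std::mt19937` stands in for GRand.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical helper: treat each activation as a probability and replace it
// with a binary draw (1.0 with probability p, else 0.0).
void resampleBinary(std::vector<double>& activations, std::mt19937& rng) {
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    for (double& p : activations) {
        assert(p >= 0.0 && p <= 1.0);
        p = (uniform(rng) < p) ? 1.0 : 0.0;
    }
}
```

An activation of exactly 0 always stays 0 and an activation of exactly 1 always becomes 1, since the uniform draw lies in [0, 1).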

| virtual void | resetWeights (GRand &rand) |

| | Initialize the weights with small random values. More...

|

| |

| virtual void | resize (size_t inputs, size_t outputs, GRand *pRand=NULL, double deviation=0.03) |

| | Resizes this layer. If pRand is non-NULL, then it preserves existing weights when possible and initializes any others to small random values. More...

|

| |

| virtual void | scaleUnitIncomingWeights (size_t unit, double scalar) |

| | Scale weights that feed into the specified unit. More...

|

| |

| virtual void | scaleUnitOutgoingWeights (size_t input, double scalar) |

| | Scale weights that feed into this layer from the specified input. More...

|

| |

| virtual void | scaleWeights (double factor, bool scaleBiases) |

| | Multiplies all the weights in this layer by the specified factor. More...

|

| |

| virtual GDomNode * | serialize (GDom *pDoc) |

| | Marshals this layer into a DOM. More...

|

| |

| virtual const char * | type () |

| | Returns the type of this layer. More...

|

| |

| virtual double | unitIncomingWeightsL1Norm (size_t unit) |

| | Compute the L1 norm (sum of absolute values) of weights feeding into the specified unit. More...

|

| |

| virtual double | unitIncomingWeightsL2Norm (size_t unit) |

| | Compute the L2 norm (sum of squares) of weights feeding into the specified unit. More...

|

| |

| virtual double | unitOutgoingWeightsL1Norm (size_t input) |

| | Compute the L1 norm (sum of absolute values) of weights feeding into this layer from the specified input. More...

|

| |

| virtual double | unitOutgoingWeightsL2Norm (size_t input) |

| | Compute the L2 norm (sum of squares) of weights feeding into this layer from the specified input. More...

|

| |

| virtual void | updateBias (double learningRate, double momentum) |

| | Updates the bias of this layer by gradient descent. (Assumes the error has already been computed and deactivated.) More...

|

| |

| virtual void | updateWeights (const double *pUpStreamActivation, size_t inputStart, size_t inputCount, double learningRate, double momentum) |

| | Adjust weights that feed into this layer. (Assumes the error has already been deactivated.) More...

|

| |

| virtual void | updateWeightsAndRestoreDroppedOnes (const double *pUpStreamActivation, size_t inputStart, size_t inputCount, double learningRate, double momentum) |

| | This is a special weight update method for use with drop-connect. It updates the weights, and restores the weights that were previously dropped by a call to dropConnect. More...

|

| |

| virtual size_t | vectorToWeights (const double *pVector) |

| | Deserialize from a vector to the weights in this layer. Return the number of elements consumed. More...

|

| |

| GMatrix & | weights () |

| | Returns a reference to the weights matrix of this layer. More...

|

| |

| virtual size_t | weightsToVector (double *pOutVector) |

| | Serialize the weights in this layer into a vector. Return the number of elements written. More...

|

| |

| | GNeuralNetLayer () |

| |

| virtual | ~GNeuralNetLayer () |

| |

| virtual void | copySingleNeuronWeights (size_t source, size_t dest) |

| |

| void | feedForward (const double *pIn) |

| | Feeds in the bias and pIn, then computes the activation of this layer. More...

|

| |

| virtual void | feedIn (GNeuralNetLayer *pUpStreamLayer, size_t inputStart) |

| | Feeds the previous layer's activation into this layer. (Implementations for specialized hardware may override this method to avoid shuttling the previous layer's activation back to host memory.) More...

|

| |

| GMatrix * | feedThrough (const GMatrix &data) |

| | Feeds a matrix through this layer, one row at a time, and returns the resulting transformed matrix. More...

|

| |

| virtual void | getWeightsSingleNeuron (size_t outputNode, double *&weights) |

| | Gets the weights and bias of a single neuron. More...

|

| |

| virtual void | setWeightsSingleNeuron (size_t outputNode, const double *weights) |

| | Sets the weights and bias of a single neuron. More...

|

| |

| virtual void | updateWeights (GNeuralNetLayer *pUpStreamLayer, size_t inputStart, double learningRate, double momentum) |

| | Refines the weights by gradient descent. More...

|

| |

| virtual void | updateWeightsAndRestoreDroppedOnes (GNeuralNetLayer *pUpStreamLayer, size_t inputStart, double learningRate, double momentum) |

| | Refines the weights by gradient descent. More...

|

| |

| virtual bool | usesGPU () |

| | Returns true iff this layer does its computations in parallel on a GPU. More...

|

| |

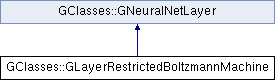

Public Member Functions inherited from GClasses::GNeuralNetLayer

Static Public Member Functions inherited from GClasses::GNeuralNetLayer

Protected Member Functions inherited from GClasses::GNeuralNetLayer