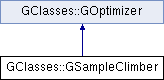

This is a variant of empirical gradient descent that tries to estimate the gradient using a minimal number of samples. It is more efficient than empirical gradient descent, but it only works well if the optimization surface is quite locally linear.
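The idea can be illustrated with a minimal, self-contained sketch. It is not the GSampleClimber source. The struct `SampledGradientDescent`, its `probe` distance, and the rule of probing one random unit direction per iteration and blending the result into a running estimate are all assumptions made for illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <random>
#include <utility>
#include <vector>

// Hypothetical stand-in for the idea described above; NOT the library's code.
struct SampledGradientDescent {
    std::function<double(const std::vector<double>&)> f; // error function to minimize
    std::vector<double> x;     // current vector
    std::vector<double> grad;  // running gradient estimate
    double error;              // cached f(x)
    double alpha = 0.1;        // blend rate for each new sample (assumed meaning)
    double stepSize = 0.05;    // multiplier applied to the estimate when stepping
    double probe = 1e-4;       // finite-difference probe distance (assumption)
    std::mt19937 rng{42};

    SampledGradientDescent(std::function<double(const std::vector<double>&)> fn,
                           std::vector<double> start)
        : f(std::move(fn)), x(std::move(start)), grad(x.size(), 0.0), error(f(x)) {}

    // One iteration: probe f along a single random unit direction, fold the
    // measured slope into the running estimate, then step against the estimate.
    double iterate() {
        const std::size_t n = x.size();
        std::normal_distribution<double> gauss(0.0, 1.0);
        std::vector<double> dir(n);
        double norm = 0.0;
        for (double& d : dir) { d = gauss(rng); norm += d * d; }
        norm = std::sqrt(norm);
        if (norm == 0.0) return error;  // degenerate draw; skip this iteration
        for (double& d : dir) d /= norm;

        std::vector<double> cand(x);
        for (std::size_t i = 0; i < n; ++i) cand[i] += probe * dir[i];
        const double slope = (f(cand) - error) / probe;  // directional derivative

        for (std::size_t i = 0; i < n; ++i) {
            // Scaling by n makes the single-direction sample unbiased on average.
            const double sample = static_cast<double>(n) * slope * dir[i];
            grad[i] = (1.0 - alpha) * grad[i] + alpha * sample;
            x[i] -= stepSize * grad[i];
        }
        error = f(x);
        return error;
    }
};
```

Each iteration of this sketch evaluates the error function only twice regardless of dimensionality, which is where the saving over measuring every dimension would come from. The slope of a single probe is only informative when the surface is close to linear over the probe distance.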

#include <GHillClimber.h>

| Type | Member | Description |
|---|---|---|
| | `GSampleClimber(GTargetFunction *pCritic, GRand *pRand)` | |
| `virtual` | `~GSampleClimber()` | |
| `virtual double *` | `currentVector()` | Returns the best vector yet found. |
| `virtual double` | `iterate()` | Performs a little more optimization. (Call this in a loop until acceptable results are found.) |
| `void` | `setAlpha(double d)` | Sets the alpha value. It should be small (like 0.01). A very small value updates the gradient estimate slowly, but precisely. A bigger value updates the estimate quickly, but never converges very close to the precise gradient. |
| `void` | `setStepSize(double d)` | Sets the current step size. |

| Type | Member (inherited from GClasses::GOptimizer) | Description |
|---|---|---|
| | `GOptimizer(GTargetFunction *pCritic)` | |
| `virtual` | `~GOptimizer()` | |
| `double` | `searchUntil(size_t nBurnInIterations, size_t nIterations, double dImprovement)` | This will first call iterate() nBurnInIterations times, then it will repeatedly call iterate() in blocks of nIterations times. If the error heuristic has not improved by the specified ratio after a block of iterations, it will stop. (For example, if the error before the block of iterations was 50, and the error after is 49, then training will stop if dImprovement is > 0.02.) If the error heuristic is not stable, then the value of nIterations should be large. |
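The stopping rule described for searchUntil can be sketched as a free function. This is a hypothetical re-creation from the description only, not the GOptimizer source. The name `searchUntilSketch`, the `std::function` parameter standing in for `iterate()`, and the assumption that `iterate()` returns the current error are all illustrative.

```cpp
#include <cstddef>
#include <functional>

// Hypothetical re-creation of the described stopping rule; NOT the library's code.
// `iterate` stands in for one optimizer step and is assumed to return the
// current error. Assumes blockSize > 0 and minImprovement > 0.
double searchUntilSketch(const std::function<double()>& iterate,
                         std::size_t burnIn,
                         std::size_t blockSize,
                         double minImprovement) {
    double error = 0.0;
    for (std::size_t i = 0; i < burnIn; ++i) error = iterate();
    if (burnIn == 0) error = iterate();  // still need a baseline error

    for (;;) {
        const double before = error;
        if (!(before > 0.0)) break;  // nothing left to improve (also stops on NaN)
        for (std::size_t i = 0; i < blockSize; ++i) error = iterate();
        // Relative improvement over the block, e.g. (50 - 49) / 50 = 0.02.
        const double ratio = (before - error) / before;
        if (!(ratio >= minImprovement)) break;
    }
    return error;
}
```

With the example from the description, a block that moves the error from 50 to 49 gives a ratio of 0.02, so this sketch stops when `minImprovement` is 0.05 and continues with another block when it is 0.01.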

`virtual GClasses::GSampleClimber::~GSampleClimber()` [virtual]

`virtual double* GClasses::GSampleClimber::currentVector()` [inline, virtual]

`virtual double GClasses::GSampleClimber::iterate()` [virtual]

Performs a little more optimization. (Call this in a loop until acceptable results are found.)
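The "call this in a loop" pattern might look like the following sketch. The helper `runUntil` is hypothetical and works with any object exposing `double iterate()`. It assumes, without confirmation from this page, that the returned value is the current error and that lower is better.

```cpp
#include <cstddef>

// Hypothetical driver loop; not part of the library.
// Assumes opt.iterate() returns the current error (lower is better).
// Returns the number of iterations actually performed.
template <typename Optimizer>
std::size_t runUntil(Optimizer& opt, double targetError, std::size_t maxIterations) {
    std::size_t used = 0;
    while (used < maxIterations) {
        ++used;
        if (opt.iterate() <= targetError) break;  // acceptable result reached
    }
    return used;
}
```

The iteration cap guards against a target error that is never reached.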

Implements GClasses::GOptimizer.

`void GClasses::GSampleClimber::reset()` [protected]

`void GClasses::GSampleClimber::setAlpha(double d)` [inline]

Sets the alpha value. It should be small (like 0.01). A very small value updates the gradient estimate slowly, but precisely. A bigger value updates the estimate quickly, but never converges very close to the precise gradient.

`void GClasses::GSampleClimber::setStepSize(double d)` [inline]

Sets the current step size.

`double GClasses::GSampleClimber::m_alpha` [protected]

`size_t GClasses::GSampleClimber::m_dims` [protected]

`double GClasses::GSampleClimber::m_dStepSize` [protected]

`double GClasses::GSampleClimber::m_error` [protected]

`double* GClasses::GSampleClimber::m_pCand` [protected]

`double* GClasses::GSampleClimber::m_pDir` [protected]

`double* GClasses::GSampleClimber::m_pGradient` [protected]

`GRand* GClasses::GSampleClimber::m_pRand` [protected]

`double* GClasses::GSampleClimber::m_pVector` [protected]

Public Member Functions inherited from GClasses::GOptimizer

Protected Attributes inherited from GClasses::GOptimizer