|

| | GLayerConvolutional2D (size_t inputCols, size_t inputRows, size_t inputChannels, size_t kernelSize, size_t kernelsPerChannel, GActivationFunction *pActivationFunction=NULL) |

| | General-purpose constructor. For example, if your input is a 64x48 color (RGB) image, then inputCols will be 64, inputRows will be 48, and inputChannels will be 3. The total input size will be 9216 (64*48*3=9216). If kernelSize is 5, then the output will consist of 60 columns (64-5+1=60) and 44 rows (48-5+1=44). If kernelsPerChannel is 2, then there will be 6 (3*2=6) channels in the output, for a total of 15840 (60*44*6=15840) output values. (kernelSize must be <= inputCols and <= inputRows.) More...

|
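The size arithmetic in the constructor description can be checked with a small stand-alone sketch. `ConvShape` and `convOutputShape` are hypothetical names introduced here for illustration; they are not part of GClasses, and the bounds check reflects the assumption that the kernel must fit inside the image in both dimensions.

```cpp
#include <cstddef>
#include <stdexcept>

// Hypothetical helper (not a GClasses type): the shape of the output
// described in the constructor documentation.
struct ConvShape
{
	std::size_t cols;
	std::size_t rows;
	std::size_t channels;
	std::size_t total() const { return cols * rows * channels; }
};

// Computes the output shape from the constructor arguments.
// Assumption: kernelSize must be at least 1 and no larger than either
// input dimension, otherwise the subtraction below would underflow.
ConvShape convOutputShape(std::size_t inputCols, std::size_t inputRows,
	std::size_t inputChannels, std::size_t kernelSize, std::size_t kernelsPerChannel)
{
	if(kernelSize == 0 || kernelSize > inputCols || kernelSize > inputRows)
		throw std::invalid_argument("kernelSize must be in [1, min(inputCols, inputRows)]");
	ConvShape s;
	s.cols = inputCols - kernelSize + 1;
	s.rows = inputRows - kernelSize + 1;
	s.channels = inputChannels * kernelsPerChannel;
	return s;
}
```

With the values from the example (64, 48, 3, 5, 2), this yields 60 columns, 44 rows, 6 channels, and 15840 output values.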

| |

| | GLayerConvolutional2D (GDomNode *pNode) |

| | Deserializing constructor. More...

|

| |

| virtual | ~GLayerConvolutional2D () |

| |

| virtual void | activate () |

| | Applies the activation function to the net vector to compute the activation vector. More...

|

| |

| virtual double * | activation () |

| | Returns the activation values from the most recent call to feedForward(). More...

|

| |

| virtual void | backPropError (GNeuralNetLayer *pUpStreamLayer, size_t inputStart=0) |

| | Backpropagates the error from this layer into the upstream layer's error vector. (Assumes that the error in this layer has already been computed and deactivated. The error this computes is with respect to the output of the upstream layer.) More...

|

| |

| double * | bias () |

| |

| double * | biasDelta () |

| |

| virtual void | computeError (const double *pTarget) |

| | Computes the error terms associated with the output of this layer, given a target vector. (Note that this is the error of the output, not the error of the weights. To obtain the error term for the weights, deactivateError must be called.) More...

|

| |

| virtual void | copyBiasToNet () |

| | Copies the bias vector into the net vector. More...

|

| |

| virtual void | copyWeights (GNeuralNetLayer *pSource) |

| | Copy the weights from pSource to this layer. (Assumes pSource is the same type of layer.) More...

|

| |

| virtual size_t | countWeights () |

| | Returns the number of double-precision elements necessary to serialize the weights of this layer into a vector. More...

|

| |

| virtual void | deactivateError () |

| | Multiplies each element in the error vector by the derivative of the activation function. This results in the error having meaning with respect to the weights, instead of the output. (Assumes the error for this layer has already been computed.) More...

|
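As a rough illustration of what "deactivating" the error means, the following stand-alone sketch multiplies each error term by the derivative of the activation function. It assumes a tanh activation, whose derivative can be written in terms of the activation value a as 1 - a*a. The function name and the use of `std::vector` are assumptions for this sketch, not the library's internals.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical sketch (not the GClasses implementation).
// Scales each error term by the derivative of tanh, evaluated from the
// stored activation value: d/dx tanh(x) = 1 - tanh(x)^2.
void deactivateErrorTanh(const std::vector<double>& activation, std::vector<double>& error)
{
	if(activation.size() != error.size())
		throw std::invalid_argument("activation and error must have the same size");
	for(std::size_t i = 0; i < error.size(); i++)
		error[i] *= 1.0 - activation[i] * activation[i];
}
```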

| |

| virtual void | diminishWeights (double amount, bool regularizeBiases) |

| | Diminishes all the weights (that is, moves them in the direction toward 0) by the specified amount. More...

|
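One way to read "moves them in the direction toward 0" is the sketch below. It assumes that a weight whose magnitude is smaller than the amount stops at 0 rather than crossing to the other sign; that detail, the function name, and the flat `std::vector` storage are assumptions, not something the summary above confirms.

```cpp
#include <vector>

// Hypothetical sketch (not the GClasses implementation).
// Moves every weight toward 0 by `amount`, clamping at 0 so that no
// weight changes sign (an assumption made for this sketch).
void diminishTowardZero(std::vector<double>& weights, double amount)
{
	for(double& w : weights)
	{
		if(w > amount)
			w -= amount;
		else if(w < -amount)
			w += amount;
		else
			w = 0.0;
	}
}
```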

| |

| virtual void | dropConnect (GRand &rand, double probOfDrop) |

| | Throws an exception, because convolutional layers do not support dropConnect. More...

|

| |

| virtual void | dropOut (GRand &rand, double probOfDrop) |

| | Randomly sets the activation of some units to 0. More...

|
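A minimal sketch of the drop-out idea, using the standard library's random facilities in place of GRand. Whether the real method rescales the surviving activations is not stated above, so this sketch does not; the function name and signature are hypothetical.

```cpp
#include <random>
#include <vector>

// Hypothetical sketch (not the GClasses implementation).
// Sets each activation to 0 independently with probability probOfDrop.
// Surviving activations are left unchanged (no rescaling is assumed).
void dropOutSketch(std::vector<double>& activation, std::mt19937& rng, double probOfDrop)
{
	std::bernoulli_distribution drop(probOfDrop);
	for(double& a : activation)
	{
		if(drop(rng))
			a = 0.0;
	}
}
```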

| |

| virtual double * | error () |

| | Returns a buffer used to store error terms for each unit in this layer. More...

|

| |

| virtual void | feedIn (const double *pIn, size_t inputStart, size_t inputCount) |

| | Feeds a portion of the inputs through the weights and updates the net. More...

|
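To make "feeds the inputs through the weights and updates the net" concrete for a convolutional layer, here is a single-channel sketch of a valid (no padding) 2D convolution that accumulates into a net buffer. The row-major memory layout, the unflipped kernel (cross-correlation), and every name below are assumptions for illustration; the summary above does not specify how GLayerConvolutional2D arranges its data.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical sketch (not the GClasses implementation).
// Computes net = bias + (input * kernel) for one channel and one kernel,
// sliding a k-by-k kernel over a row-major image without padding.
std::vector<double> convolveValid(const std::vector<double>& in, std::size_t cols, std::size_t rows,
	const std::vector<double>& kernel, std::size_t k, double bias)
{
	if(k == 0 || k > cols || k > rows || in.size() != cols * rows || kernel.size() != k * k)
		throw std::invalid_argument("inconsistent sizes");
	std::size_t outCols = cols - k + 1;
	std::size_t outRows = rows - k + 1;
	// Start from the bias, as copyBiasToNet is described as doing.
	std::vector<double> net(outCols * outRows, bias);
	for(std::size_t oy = 0; oy < outRows; oy++)
		for(std::size_t ox = 0; ox < outCols; ox++)
			for(std::size_t ky = 0; ky < k; ky++)
				for(std::size_t kx = 0; kx < k; kx++)
					net[oy * outCols + ox] += in[(oy + ky) * cols + (ox + kx)] * kernel[ky * k + kx];
	return net;
}
```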

| |

| virtual size_t | inputs () |

| | Returns the number of values expected to be fed as input into this layer. More...

|

| |

| GMatrix & | kernels () |

| |

| virtual void | maxNorm (double max) |

| | Clips each kernel weight (not including the bias) to fall between -max and max. More...

|
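The clipping described for maxNorm can be sketched as an element-wise clamp. This is a stand-alone illustration over a flat `std::vector`; the function name and the storage are assumptions, and the bias is simply not passed in.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical sketch (not the GClasses implementation).
// Clips each kernel weight into the closed interval [-maxVal, maxVal].
void clipWeights(std::vector<double>& kernelWeights, double maxVal)
{
	for(double& w : kernelWeights)
		w = std::max(-maxVal, std::min(maxVal, w));
}
```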

| |

| double * | net () |

| | Returns the net vector (that is, the values computed before the activation function was applied) from the most recent call to feedForward(). More...

|

| |

| virtual size_t | outputs () |

| | Returns the number of nodes or units in this layer. More...

|

| |

| virtual void | perturbWeights (GRand &rand, double deviation, size_t start, size_t count) |

| | Perturbs the weights that feed into the specified units with Gaussian noise. start specifies the first unit whose incoming weights are perturbed. count specifies the maximum number of units whose incoming weights are perturbed. More...

|

| |

| virtual void | renormalizeInput (size_t input, double oldMin, double oldMax, double newMin=0.0, double newMax=1.0) |

| | Throws an exception. More...

|

| |

| virtual void | resetWeights (GRand &rand) |

| | Initialize the weights with small random values. More...

|

| |

| virtual void | resize (size_t inputs, size_t outputs, GRand *pRand=NULL, double deviation=0.03) |

| | Resizes this layer. If pRand is non-NULL, an exception is thrown. More...

|

| |

| virtual void | scaleUnitIncomingWeights (size_t unit, double scalar) |

| | Throws an exception. More...

|

| |

| virtual void | scaleUnitOutgoingWeights (size_t input, double scalar) |

| | Throws an exception. More...

|

| |

| virtual void | scaleWeights (double factor, bool scaleBiases) |

| | Multiplies all the weights in this layer by the specified factor. More...

|

| |

| virtual GDomNode * | serialize (GDom *pDoc) |

| | Marshals this layer into a DOM. More...

|

| |

| virtual const char * | type () |

| | Returns the type of this layer. More...

|

| |

| virtual double | unitIncomingWeightsL1Norm (size_t unit) |

| | Throws an exception. More...

|

| |

| virtual double | unitIncomingWeightsL2Norm (size_t unit) |

| | Throws an exception. More...

|

| |

| virtual double | unitOutgoingWeightsL1Norm (size_t input) |

| | Throws an exception. More...

|

| |

| virtual double | unitOutgoingWeightsL2Norm (size_t input) |

| | Throws an exception. More...

|

| |

| virtual void | updateBias (double learningRate, double momentum) |

| | Updates the bias of this layer by gradient descent. (Assumes the error has already been computed and deactivated.) More...

|

| |

| virtual void | updateWeights (const double *pUpStreamActivation, size_t inputStart, size_t inputCount, double learningRate, double momentum) |

| | Updates the weights that feed into this layer (not including the bias) by gradient descent. (Assumes the error has already been computed and deactivated.) More...

|

| |

| virtual void | updateWeightsAndRestoreDroppedOnes (const double *pUpStreamActivation, size_t inputStart, size_t inputCount, double learningRate, double momentum) |

| | This is a special weight update method for use with drop-connect. It updates the weights, and restores the weights that were previously dropped by a call to dropConnect. More...

|

| |

| virtual size_t | vectorToWeights (const double *pVector) |

| | Deserialize from a vector to the weights in this layer. Return the number of elements consumed. More...

|

| |

| virtual size_t | weightsToVector (double *pOutVector) |

| | Serialize the weights in this layer into a vector. Return the number of elements written. More...

|
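The relationship between countWeights, weightsToVector, and vectorToWeights can be illustrated with a toy layer that round-trips its parameters through a flat buffer. `ToyLayer`, its two member vectors, and the bias-then-kernels ordering are all assumptions for this sketch; the actual ordering used by GLayerConvolutional2D is not stated above.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical toy layer (not a GClasses type).
struct ToyLayer
{
	std::vector<double> bias;
	std::vector<double> kernels;

	// Number of doubles needed to hold every weight of this layer.
	std::size_t countWeights() const { return bias.size() + kernels.size(); }

	// Writes bias first, then kernels (ordering is an assumption).
	// Returns the number of elements written.
	std::size_t weightsToVector(double* pOutVector) const
	{
		std::copy(bias.begin(), bias.end(), pOutVector);
		std::copy(kernels.begin(), kernels.end(), pOutVector + bias.size());
		return countWeights();
	}

	// Reads the weights back in the same order.
	// Returns the number of elements consumed.
	std::size_t vectorToWeights(const double* pVector)
	{
		std::copy(pVector, pVector + bias.size(), bias.begin());
		std::copy(pVector + bias.size(), pVector + countWeights(), kernels.begin());
		return countWeights();
	}
};
```

Because both methods return the element count, a caller can walk a single buffer across several layers by advancing the pointer by each return value.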

| |

| | GNeuralNetLayer () |

| |

| virtual | ~GNeuralNetLayer () |

| |

| virtual void | copySingleNeuronWeights (size_t source, size_t dest) |

| |

| void | feedForward (const double *pIn) |

| | Feeds in the bias and pIn, then computes the activation of this layer. More...

|

| |

| virtual void | feedIn (GNeuralNetLayer *pUpStreamLayer, size_t inputStart) |

| | Feeds the previous layer's activation into this layer. (Implementations for specialized hardware may override this method to avoid shuttling the previous layer's activation back to host memory.) More...

|

| |

| GMatrix * | feedThrough (const GMatrix &data) |

| | Feeds a matrix through this layer, one row at a time, and returns the resulting transformed matrix. More...

|

| |

| virtual void | getWeightsSingleNeuron (size_t outputNode, double *&weights) |

| | Gets the weights and bias of a single neuron. More...

|

| |

| virtual void | setWeightsSingleNeuron (size_t outputNode, const double *weights) |

| | Sets the weights and bias of a single neuron. More...

|

| |

| virtual void | updateWeights (GNeuralNetLayer *pUpStreamLayer, size_t inputStart, double learningRate, double momentum) |

| | Refines the weights by gradient descent. More...

|

| |

| virtual void | updateWeightsAndRestoreDroppedOnes (GNeuralNetLayer *pUpStreamLayer, size_t inputStart, double learningRate, double momentum) |

| | Refines the weights by gradient descent, and restores the weights that were previously dropped by a call to dropConnect. More...

|

| |

| virtual bool | usesGPU () |

| | Returns true iff this layer does its computations in parallel on a GPU. More...

|

| |

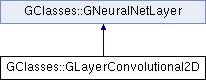

Public Member Functions inherited from GClasses::GNeuralNetLayer

Static Public Member Functions inherited from GClasses::GNeuralNetLayer

Protected Member Functions inherited from GClasses::GNeuralNetLayer